Data Science and the Jupyter Notebook Environment

March 01, 2018 | Lee Liming

If you’re not already using Jupyter notebooks in your research or teaching, you might want to take a look.

Our vision at Globus is to vastly improve the overall research data management experience, and the Globus service/platform is the most visible result of our work. But our interest is actually much broader. Our team members are constantly visiting and listening to members of the research community who have data challenges, and learning whatever we can. We know that Globus by itself won’t satisfy every challenge and that other teams are working toward similar goals. So as we build Globus, our strategy is to offer solutions in ways that complement everyone else’s work.

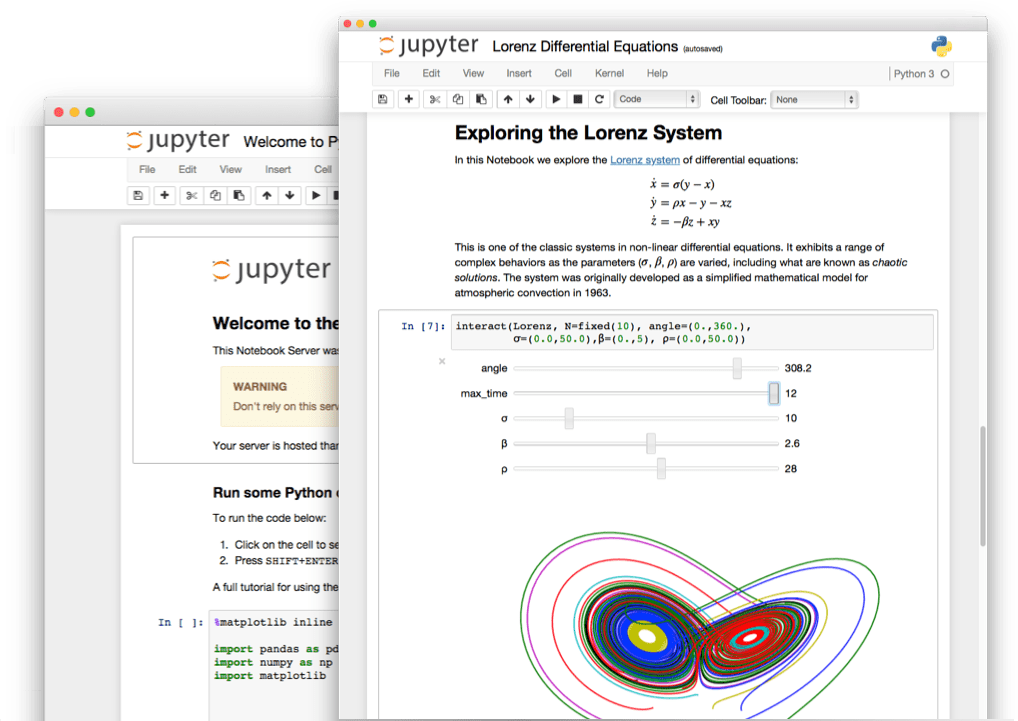

One exciting trend we’ve observed over the years has been the movement toward computational notebooks. A computational notebook is a document-creation environment (like a text editor or a word processor) connected to a live computation engine. When you add a line of code to your document, you (or your readers) can execute that code and see the results within the document. For a researcher, it’s a way to record your activities as you work with code and data. For the reader, it’s a way to retrace the author’s steps, and possibly try alternate paths. For a data scientist, it can be a way to try out data processing steps interactively until the data is in just the right format, with just the right view, with all of the steps taken to get there laid out for review.
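To make the idea concrete, here is a minimal sketch in Python of a document whose code can be executed in place. The dictionary layout loosely follows Jupyter's JSON notebook format (version 4), but the function names `make_notebook` and `run_notebook` are hypothetical, and the `exec`-based runner is only a toy stand-in for a real Jupyter kernel.

```python
import contextlib
import io
import json


def make_notebook():
    """Build a tiny notebook-like document: prose cells interleaved with code cells."""
    def code_cell(source):
        return {"cell_type": "code", "metadata": {}, "execution_count": None,
                "outputs": [], "source": source}

    return {
        "nbformat": 4,
        "nbformat_minor": 2,
        "metadata": {},
        "cells": [
            {"cell_type": "markdown", "metadata": {},
             "source": "Convert temperature readings from Fahrenheit to Celsius."},
            code_cell("readings_f = [32.0, 212.0]"),
            code_cell("readings_c = [(f - 32) * 5 / 9 for f in readings_f]\n"
                      "print(readings_c)"),
        ],
    }


def run_notebook(notebook):
    """Toy 'computation engine': run each code cell and store its printed output."""
    namespace = {}  # shared state, so later cells can see variables from earlier ones
    count = 0
    for cell in notebook["cells"]:
        if cell["cell_type"] != "code":
            continue  # prose cells are kept as-is
        count += 1
        buffer = io.StringIO()
        with contextlib.redirect_stdout(buffer):
            exec(cell["source"], namespace)
        cell["execution_count"] = count
        cell["outputs"] = [{"output_type": "stream", "name": "stdout",
                            "text": buffer.getvalue()}]
    return notebook


if __name__ == "__main__":
    notebook = run_notebook(make_notebook())
    # The code, the prose, and the results now travel together in one document.
    print(json.dumps(notebook, indent=2))
```

Because the results are stored next to the code that produced them, a reader can review each step, change a cell, and run it again to try an alternate path.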

Project Jupyter is one of the most recent efforts to implement and promote computational notebooks within the research and education community. Standing on the shoulders of earlier efforts (Mathematica, MATLAB, E-Notebooks), it’s become quite popular among data scientists, software developers, and educators. Jupyter’s notebook environment, where work is captured in documents that can be organized, saved, copied, and shared with others, is naturally suited to many kinds of workflows and teaching methods.

Jupyter is open source and free to use, and it works well with more than 40 programming and data science languages including Python, R, Julia, and Scala. You use it through a Web browser, and the server behind it can run on your own workstation or laptop. It can work with “big data” tools on cloud and HPC systems, and it allows notebook sharing using familiar services like Dropbox, GitHub, and email.

A lot of useful and creative work is being done in the Jupyter notebook environment. For example:

- The LIGO Open Science Center uses Jupyter notebooks in their tutorials on detecting gravitational waves. (LIGO won the 2017 Nobel Prize in Physics.)

- Monica Bobra and Stathis Ilonidis published an article on predicting coronal mass ejections using machine learning in The Astrophysical Journal, with an accompanying notebook that reproduces all of their results.

- Brian Keegan, a researcher of media and technology at Northeastern University, published a live analysis of whether or not films that pass the Bechdel test make more money for their producers.

- Jeremy Singer-Vine, a journalist, published a notebook analyzing the segregation of St. Louis County, MO.

- And of course, we use notebooks to demonstrate Globus for application developers, for example how to build a modern research data portal.

The Jupyter GitHub repository lists many more compelling examples of notebooks used in science, humanities, and software development.

Jupyter can be run in a range of ways. The simplest is to install and use a private copy on your own workstation or laptop. Or you could install JupyterHub, a multi-user server, on a department or cloud server and let others (e.g., students, research colleagues, project team members) create and share notebooks. (If you run a JupyterHub for a team, you’re a Software-as-a-Service provider!)

The next generation of Jupyter is JupyterLab, a more integrated desktop environment that provides access to widgets such as terminal sessions, text editors, notebooks, and custom components. Widgets can be arranged using tabs and splitters. We’re very excited about JupyterLab because it’s highly extensible, allowing third-party developers (like us) to add custom components. JupyterLab is currently available as a beta release and the 1.0 version is expected later this year.

Having seen the exciting and innovative things people are doing with research data in the Jupyter environment, we’ve been keen to ensure that Globus works well there, too.

Naturally, we want people crafting notebooks to be able to use Globus to import and export data: the importance of data access within a data analysis environment is hard to overstate! But there’s another reason to use Globus in a Jupyter notebook. Globus can provide something that the Web protocols used in most notebooks can’t, namely negotiation of access policies and access control. Put simply, Globus’s identity and authentication services make it possible for Jupyter users to work with protected or restricted data, not just public data, in their notebooks. Globus’s security services combine the commercial Web’s OpenID Connect mechanism with the academic community’s InCommon federation. Integrating these features with Jupyter, JupyterHub, and JupyterLab has been a particular interest of ours, and one that will be covered in our next blog post on this topic.

We see strong synergy between Globus and the Jupyter project, and we’re learning what we must do to ensure that researchers, data analysts, educators, students, and writers have the best possible experience using our services in the Jupyter environment.